A Science of Economies?

from Geoff Davies

Debates about whether economics is or can ever be a science appear frequently on this site, such as making economics a relevant science. Perhaps more in the subsequent comments than in the articles themselves, there are some recurring confusions and misconceptions, such as whether mathematics should be involved, about what the role of mathematics might be, about “prediction” as a necessary part of a science, about the role of assumptions and approximations, about whether any study involving people can ever be a science and, fundamentally, about what science really is.

I have commented in passing on this topic before, for example here, but in this comment I’d like to offer a more focussed discussion.

My day job has been studying the Earth, using physics. This has taught me some distinctions between science and not-science, and it has led me to expand my view of what science might encompass. Here are a few points.

The Earth is rather messy (though not as messy as people), so one has to learn to sift carefully through many, often confusing, observations to find a nugget of insight.

The deep interior of the Earth, my subject, is remote from direct observation, so one infers what might be down there from indirect observations made at the Earth’s surface. This led me to realise that all observations, in any field, are interpreted through such inference. The notion of a “fact” becomes slippery, so I talk about observations, not facts.

One cannot experiment with the Earth, one can only observe the effects of “natural experiments”. The goal is always to figure out how the Earth got to its present condition.

It is also a historical science, so one has to be careful what one means by prediction from a theory, and perhaps to use a different expression: deducing the implications of a theory.

Good science does not have to involve complicated mathematics or high precision. The precision required of measurements or calculations depends on the context. Sometimes a very rough measurement or estimate can yield a crucial insight, whereas other times an elaborate and high-precision measurement or calculation might be called for. The mathematics involved might be simple, or highly sophisticated, or even just logical relationships: before, after, bigger than, smaller than.

Science can be presented as a two-stage process: induction from observations to form a hypothesis, then deduction from the hypothesis and comparison with more observations, to test whether the hypothesis is consistent with what we can observe.

Although I have seen induction presented as though it were a logical process, it is not. It is a process of recognition, or cognition. It is the perception of a pattern of some kind in the available observations: certain animals are seen migrating and soon after the weather turns colder; the sun rises at regular intervals from a certain part of the horizon; high levels of debt tend to be followed by a market crash.

The description of such a perceived pattern, or of what might cause the pattern, comprises a hypothesis: some animals move to warmer places ahead of winter; the sun goes round the Earth at a steady rate on a regular path; debt higher than certain levels becomes unstable.

One can then deduce implications of the hypothesis: one ought to be able to find the animals living in a warmer place during winter; the sun will rise at a certain time and place within the next 24 hours; one ought to be able to model a financial market crash in terms of a debt overshoot-and-collapse process, analogous to population dynamics.

Having drawn out implications (or made “predictions”), one can test them against additional observations: journey to warmer climes and look for the animals in winter; wait for another sunrise; see if the rate of loan defaults rises as a crash begins.

With this brief sketch of the scientific process, we can clarify a few things. One, commonly noted, is that there may be more than one hypothesis. Perhaps the Earth tumbles headlong while the sun stays still. One might then wonder why we don’t fall off. (Whether the Earth circles around the sun is not relevant here, as I’m addressing a daily regularity, not an annual regularity.) So hypotheses may compete, and one may be displaced. Lo, these days we think the Earth tumbles headlong. How crazy are we?

Some hypotheses may be superseded but not completely abandoned. Thus Newton’s theory of gravity still works very well for many situations, even though Einstein’s works more accurately and for a wider range of situations. Note also that these two theories start from entirely different conceptions: force acting at a distance (Newton), versus variations in the local rules of geometry (Einstein).

The Newton-Einstein comparison also shows that science is not about proof, or Truth. Einstein’s theory in turn may be superseded, so we can’t assume it is the Truth. Newton’s theory is still very useful, so it is not sensible to say it is “wrong” or “disproven”. It is less useful than Einstein’s. The criterion is whether a theory is a useful guide to a given situation.

Another thing to notice is that we use an informal version of the scientific process every day, to deal with life’s little challenges and mysteries: the traffic seems to jam up briefly at a certain place and time – perhaps a nearby factory closes at that time. Science, I think, is just a refined and systematic version of this everyday process. Science is not just about subjecting things to violence in a laboratory.

Nor is science about precision; it is about understanding. There is a fairly simple formula for the amount of heat a fluid will carry by convection, given its temperature. I once used this formula to estimate that an ocean of magma 100 kilometers deep would solidify within about 10,000 years. (Just such a magma ocean might have been formed by a giant impact on the early Earth, the same impact that produced the Moon.) The formula is quite rough: the answer might be 1,000 years or 100,000 years. But the answer is unlikely to be 10 years or 10 million years. As it probably took the Earth around 50 million years to grow to its final size, this tells us the magma ocean was a transient state, not one that persisted through the late growth of the Earth. So even if the estimate is uncertain by one (or even two) orders of magnitude, we still learn something important about the early state of the Earth.
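The order-of-magnitude reasoning in the previous paragraph can be made explicit with a few lines of arithmetic. This is a minimal sketch that uses only the numbers quoted above (about 10,000 years to solidify, about 50 million years of growth); the function names are hypothetical. It widens the estimate by a chosen number of orders of magnitude and asks whether even the slowest case is still shorter than the Earth's growth time.

```python
def bracket(estimate_years, orders):
    """Return (low, high) after allowing `orders` orders of magnitude either way."""
    factor = 10 ** orders
    return estimate_years / factor, estimate_years * factor


def must_be_transient(estimate_years, orders, growth_years):
    """True if even the slowest plausible solidification time beats the growth time."""
    _, high = bracket(estimate_years, orders)
    return high < growth_years


if __name__ == "__main__":
    estimate = 1e4   # ~10,000 years, the rough convection estimate
    growth = 5e7     # ~50 million years for the Earth to reach its final size
    for orders in (1, 2):
        low, high = bracket(estimate, orders)
        print(f"+/-{orders} orders: {low:,.0f} to {high:,.0f} years; "
              f"transient: {must_be_transient(estimate, orders, growth)}")
```

Even allowing two orders of magnitude, the slowest case (about a million years) is roughly fifty times shorter than the growth time, which is why a crude formula is enough to support the conclusion.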

On the other hand, if one wants to test the “standard model” of elementary particles one must calculate things to many decimal places, because it has (so far) been found to fit observations to such levels of accuracy.

Science is not the same as mathematics. To a scientist, mathematics is a tool. More accurately, it is a toolkit of previously worked-out logical deductions and procedures. It helps in the deduction stage of science, when one wants to know the implications of one’s hypothesis. Mathematics is not science, it is an amazing collection of logical structures. Presenting a lot of mathematics does not mean one has done science. It means one has done a lot of logical relating among abstract things, but it may have little or no similarity to any observations of the world.

This is a central confusion of neoclassical economists. For over a century they seem to have thought that doing clever and rigorous mathematics is science. It is not. In fact it is pathetically easy to point out ways in which observable modern economies are quite inconsistent with the neoclassical theory (market crashes, fashion, advertising, oligopolies, technological lock-in, … ). Neoclassical economics can only be called pseudo-science.

One can do little or no mathematics and still be doing good science. One can do very elaborate mathematics and still not be doing science. The sophistication and precision of the mathematics you might use depends entirely on the question being addressed. Thus the use of mathematics, or not, is not central to the question of whether we can do science while studying the behaviour of economies.

Can we study people scientifically? This tends to be a fraught issue, because science, to many people, means laboratories, dissection, rigid “laws”, inexorable mechanisms, lack of free will, and so on. That is one kind of science, called reductionist science, the kind that pulls things apart, puts them back together and predicts the inexorable course of the “clockwork” universe, the science of Descartes and Newton. Reductionist science has been brilliantly successful at understanding many (but not all) inanimate systems. It has had much less “success” studying living systems. If you dissect your frog, it is dead. If you treat people as calculating automatons, you kill society – take a bow, Hayek and Thatcher.

However, a new kind of science has emerged over the last half-century or so. It is systems science, the study of systems with many interacting parts, and it has been demonstrated that wholly new behaviours can emerge in the whole system, behaviours that isolated parts of the system cannot produce. A stadium crowd can make a “Mexican wave”, but you alone cannot. This kind of science is not reductionist, it is holistic: the whole is greater than the sum of the parts, and only by studying the whole can it be fully understood. That’s why you miss important things about frogs when you dissect them.

Many “self-organising” systems exhibit rather irregular or unsteady behaviour. However, many of them also reveal readily perceptible regularities. This is certainly true of living systems. No two trees are the same, but trees of a given species still have quite recognisable similarities. Dogs and children run and leap in erratic ways, but we still know they have recognisable kinds of behaviour.

So, can we study people, and societies, and economies? We can if we bear in mind there is always a balance of similarity and difference. No two situations are identical, but many are similar. There would be no discipline of history if we never perceived similarities in past situations. History would just be chronicles, or, in the irreverent caricature, “just one damn thing after another”. I won’t insist that history is science, but I invite you to consider how well it might fit the description of science I gave earlier.

I do not mean any hubris here. Rather the opposite. The study of living systems requires humility. Whether one calls it social science or history, one has always to qualify the patterns one thinks one perceives, to remember that tendencies are not rules or “laws”, and to be conscious of the limitations of one’s knowledge.

Of course scientists in any discipline should always be alert to the limitations of their knowledge, and in healthy disciplines scientists keep each other honest by deflating each other’s excesses of enthusiasm. This is not an automatic process, however. Even in the physical sciences there is a tendency for groups to form among people who reinforce each other’s prejudices, leading even to the emergence of “schools”. Still, if evidence is diligently sought, it ought to be possible eventually to decide which school gives the more useful guidance. Unfortunately our tendency to such tribalism does interfere with science. The neoclassical tribe seems to have out-done all others by a long way.

The upshot of this discussion is that a science of economies is quite conceivable, provided we are sufficiently diligent, sufficiently patient, sufficiently alert to the limitations of what we think we know, and sufficiently alert to the traps. In particular, we must maintain a regular iteration between observation and hypothesis. If observations seem to be consistent with our hypothesis we may provisionally retain it. If observations seem to be inconsistent with our hypothesis, we must be willing to abandon the hypothesis. This is the great failing of neoclassical economics, that it became an infatuation with a result, the fictitious general equilibrium, instead of a scientific enquiry.

By the way, I have personal experience of a situation where there seemed to be an inconsistency with observations, but more accurate calculations revealed that the models did in fact reproduce the observations. Thus we must not be too simplistic in applying Popper’s criterion, that to be scientific a hypothesis must be falsifiable. In complicated situations (like economies) establishing “falsification” may not be a simple exercise.

In economics the issue of assumptions and approximations is particularly vexed, largely it seems because in 1953 Milton Friedman presented an influential discussion of them¹. Unfortunately Friedman was so hopelessly muddled that he is often paraphrased as saying that the best theories will have wildly unrealistic assumptions, or that assumptions don’t matter at all so long as one’s theory yields some passing resemblance to some observations. That is just nonsense.

Assumptions matter. If one’s assumptions imply near-equilibrium, then one’s theory will never be able to reproduce a market crash. If you assume the economy is close to optimal, then any attempt to reduce greenhouse gas emissions will move it from the optimum and therefore seem expensive, even though efficient and inexpensive technologies are available. (Fortunately many economists have moved on from that argument, notably Nicholas Stern of the UK.)

These issues can be illuminated through an example: a rather simplified approach to modelling a market crash, based on the idea that a crash is caused by an excess of debt. A simple way to model this is to assume there is a comfortable level of debt an economy is capable of carrying, a “carrying capacity”. Higher levels of debt cause defaults that tend to reduce the level towards the carrying capacity. Lower levels of debt allow for debt to increase.

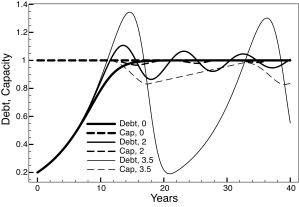

Figure 1 is from my recent book Sack the Economists. The thick curve shows the level of debt, relative to the carrying capacity, rising from low levels and smoothly approaching the optimum level. This curve was calculated by assuming debt increases by 15% per year at low levels, but the rate of increase reduces as the debt approaches the optimum, becoming zero at the optimum.

Figure 1 Overshoot-and-crash models of debt.

The latter feature was implemented by including a factor that depends, nonlinearly, on the gap between actual debt and optimal debt. This factor multiplies the basic expression for the rate of increase in debt. It expresses the feedback of the market: while debt is low it is safe to add more. As debt gets higher, adding more debt becomes riskier, so it is better not to issue more loans. When everyone is well-behaved, the result is a steady state in which the rate of issue of new loans balances the rate at which loans are paid off.
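For readers who prefer an equation, the well-behaved case can be written compactly. This is a sketch under stated assumptions, not the exact formula behind Figure 1: D is the level of debt, K the carrying capacity (the optimum), r = 0.15 per year the growth rate at low debt, and F the feedback factor. The text says only that F depends nonlinearly on the gap between D and K, so the form F(x) = 1 - x⁴ below is a hypothetical choice with the required properties.

$$
\frac{dD}{dt} = r\,D\,F\!\left(\frac{D}{K}\right), \qquad F(0) = 1, \quad F(1) = 0, \quad F(x) > 0 \ \text{for}\ x < 1, \quad F(x) < 0 \ \text{for}\ x > 1
$$

$$
\text{for example (hypothetical):}\quad F(x) = 1 - x^{4}
$$

At low debt the relative growth rate is r F(0) = r, that is 15% per year; at D = K the growth rate is zero, which is the steady state in which new lending balances repayments.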

However, if banks and customers are reckless, a different sequence may ensue. Suppose the market feedback is ignored for a time, until it becomes too obvious to ignore. Suppose, in fact, that the market feedback is delayed by 3.5 years: in the calculation, the feedback factor depends on what the (debt – optimum) gap was 3.5 years earlier. The result is shown by the thin curve: debt rises rapidly past the optimum, peaks 3.5 years after crossing it, then plummets. As lenders belatedly respond to the defaults brought on by the excess debt, debt undershoots, plunging back to very low levels. It then takes a long time to recover, after which almost the same sequence repeats.

If the delay in feedback is intermediate, 2 years, the medium curve results – debt overshoots moderately, then undershoots moderately, then continues to oscillate about the optimum. This bears an interesting resemblance to the “business cycle”.
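The effect of the feedback delay can be explored qualitatively with a few lines of Python. This is a minimal sketch under stated assumptions, not the code behind Figure 1: debt is measured relative to the carrying capacity (x = D/K), the feedback factor is the hypothetical 1 - x**4 evaluated at the debt level τ years earlier, debt is held at 5% of capacity before t = 0, and the delay equation dx/dt = r x(t) (1 - x(t - τ)⁴) is stepped with a simple Euler scheme. The function name `simulate` and all defaults other than r = 0.15 and the delays of 0, 2 and 3.5 years are illustrative.

```python
def simulate(tau, r=0.15, n=4, x0=0.05, years=100.0, dt=0.01):
    """Euler integration of dx/dt = r * x(t) * (1 - x(t - tau)**n).

    x is debt relative to the carrying capacity K. The feedback factor
    1 - x**n is a hypothetical choice; before t = 0 debt is assumed
    to sit at the constant level x0.
    """
    lag = int(round(tau / dt))       # feedback delay in time steps
    steps = int(round(years / dt))
    xs = [x0]
    for k in range(steps):
        x_now = xs[k]
        # Delayed debt level; use the assumed constant history before t = 0.
        x_lag = xs[k - lag] if k >= lag else x0
        xs.append(x_now + dt * r * x_now * (1.0 - x_lag ** n))
    return xs


if __name__ == "__main__":
    dt = 0.01
    for tau in (0.0, 2.0, 3.5):
        xs = simulate(tau, dt=dt)
        late = xs[int(60 / dt):]     # years 60 to 100
        print(f"tau={tau}: peak={max(xs):.2f}, "
              f"late range={min(late):.2f} to {max(late):.2f}")
```

With this particular factor, τ = 0 rises smoothly to K, τ = 3.5 overshoots and then keeps swinging above and below K, and τ = 2 overshoots modestly and then oscillates with a gradually shrinking amplitude. The contrast has a standard explanation: linearised about K, the equation becomes y' = -4r y(t - τ), whose steady state is stable only when 4rτ < π/2, and here 4rτ is 1.2 for τ = 2 and 2.1 for τ = 3.5. How deep the crash goes, and whether the intermediate oscillation persists, depends on the exact shape of the feedback factor.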

Obviously this is a simplified model, and it depends on assumed relationships among aggregate variables – level of debt, optimal debt, delay in feedback and so on. Nevertheless I contend it is a useful model, a useful collection of hypotheses, because it yields not only something resembling a market crash, but also, with a slightly different setting, something resembling a business cycle, and even a steady state. It is also a testable model. Both the result and the assumed relationships can be compared with more detailed information. If it should survive such tests, it has some potential to be refined into a useful model of macro-dynamics.

If you think this model is too contrived, check out the model proposed by Eggertsson and Krugman². They constructed before-and-after equilibrium models, and they simply decreed that the level of debt was lower in the second model than in the first. I don’t object to such contrivance in principle, but I do note that it involves even less insight into debt markets than my model, so it is even cruder in that sense. Their model is also open to criticism because it assumes the high and low debt states to be at equilibrium, but those are probably the times when the system is furthest from equilibrium, as is explicit in my model.

If the E-K model gives any insights into the lead-up to and aftermath of a crash, they will be limited. In particular it is not capable of addressing the imbalances that led to the crash. If it were to be any use at all, it would have to be to times well before and well after the crash, if one could argue the imbalances were small at those times, and even that is a dubious proposition given all the instabilities that can readily be identified in a modern economy. Steve Keen has challenged several of the more detailed arguments made by E and K.

Thus the E-K model has elaborate mathematics but crude assumptions. The elaborate mathematics do nothing to overcome the limitations inherent in the assumptions. My model uses much simpler mathematics (one first-order differential equation in time) but more sensible assumptions. A fundamental difference is that in my model time is allowed to flow, whereas in neoclassical models it is not.

What Friedman was probably groping for, in his muddled 1953 paper, was the notion of a first approximation, commonly used in science. When you don’t understand much about a system, it is sensible to try some quite simplified hypotheses to see how far they will get you. Thus you might suppose, as a first approximation, that the sun moves steadily around a great circle path every 24 hours. That gets you the basic phenomena of night and day, and the sun rising more-or-less in the east.

With that success you might add a second approximation: that the sun’s path oscillates slowly north and south over the course of one year. This was the approach used by Ptolemy and his forebears. After they added third-approximation epicycles and so on, it yielded passably good predictions of the sun and planets, though there were still discrepancies and you had to assume coincidences among various elements.

On the other hand Copernicus (and his ancient Indian and Greek forebears) started with a different first-approximation – that the Earth moves round the sun once per year, spinning on a tilted axis as it goes. This gets you a similar level of accuracy more economically, in other words with fewer assumptions and arbitrary elements.

However it is from Kepler’s proposal of elliptical orbits, and then Newton’s proposal of an inverse-square attracting force of gravity that a really economical description emerges. You might say that Newton’s version is a powerful first approximation. It permits second- and higher-approximations too – to include the effects of the mutual attractions among the planets.

All theories are approximations of some kind; it is impossible to include all the influences of the universe on our system of interest. The art of a good approximation is finding one that gives you the most useful descriptive power while still being mathematically tractable. It is certainly not true that a wildly inappropriate assumption might still get you a good theory, and it is just false to claim assumptions don’t matter at all.

So how do we go about building a “scientific” understanding of economies? We start where we think we see a pattern, and we try to model a cause of such a pattern. We don’t try to do the whole job in one go, trying to create a comprehensive model. My model of debt overshoot and crash addresses only one aspect of an economy, though it is an important aspect. As different aspects of economies seem to be understood, we look for ways to bring them together into more integrated and more general models.

I have argued elsewhere, and in Sack the Economists, that economies are far-from-equilibrium self-organising systems, wild horses compared with the gentle rocking horse of the neoclassical theory. As such, our understanding of them is still in its early stages. Nevertheless there are many useful pieces already available. Steve Keen³ has pioneered the kind of dynamical macro-modelling of which my model above is a rather simple example. There have been studies of aspects of complex behaviour within economies, often starting from “micro” interactions, surveyed by Eric Beinhocker⁴. Parts of various economic “schools” may become incorporated, such as the role of institutions, the roles of human behaviours (though not the versions shoe-horned into the neoclassical framework), and so on.

Those who pine for a micro-based comprehensive theory must be patient. In time micro approaches of the kind described by Beinhocker might begin to outline such a theory, but in self-organising systems the macro phenomena are emergent, and their form is often not at all obvious from the specification of micro interactions.

The goal is not so much a comprehensive model as it is to build understanding. That understanding may or may not reach a level in which plausible projections into the future might be made – predictions in the usual sense. In the meantime we will be gaining more reliable insights into important parts of economies than are available from the misguided neoclassical approach.

We must also maintain a regular iteration between models and observations, discarding models if they seem incapable of accommodating observations. Above all we must retain some humility, and bear in mind our understanding is always limited. There are two fundamental limitations on what we can hope to understand. First, an economy is such a complicated and changeable beast that we can never know all its details. Second, complex systems are intrinsically unpredictable in their details (like dogs and children), so we must allow that we can only try to understand broad trends in behaviour. Together, these limitations mean we must bear in mind that there is a balance of similarity and difference: similar situations may recur, but no two situations will be identical.

Perhaps one measure of progress in real understanding would be the falling away of the messianic ideologies and doctrinaire proclamations with which the subject has been burdened for too long.

Bibliography

1 Friedman, M., The methodology of positive economics, in Appraisal and Criticism in Economics: A Book of Readings, B. Caldwell, Editor. 1953/1984, Allen and Unwin: London.

2 Eggertsson, G.B. and P. Krugman, Debt, Deleveraging, and the Liquidity Trap: A Fisher-Minsky-Koo Approach. Quarterly Journal of Economics, 2012. 127: p. 1469-1513.

3 Keen, S., Debunking Economics: The Naked Emperor Dethroned? Second, revised and expanded ed. 2011: Zed Books.